Understanding Job Scheduling and Its Evolution

Discover the first evolutionary step after the manual execution of jobs and tasks to learn how enterprise job scheduling has evolved.

Editor’s Note: Given the continued evolution of IT automation, we thought it timely to refresh our 2019 point of view on job scheduling.

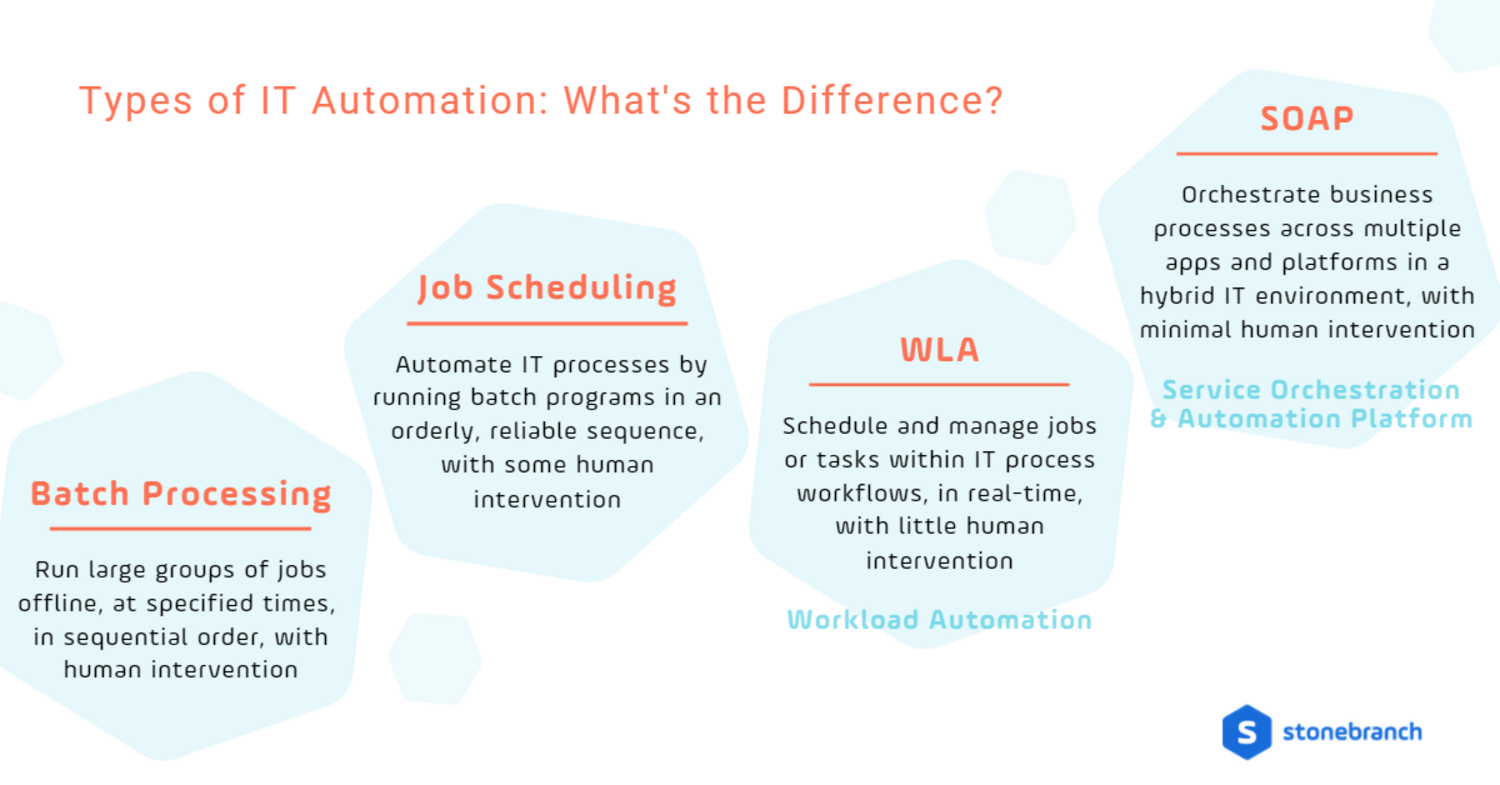

Job scheduling has been around for decades. In fact, many would consider it batch processing 2.0 — both run sequential automated jobs with minimal human interaction. Unlike batch processing, however, job schedulers can handle slightly more complex processes.

Job schedulers were originally designed as native, built-in tools to automate mainframes. Likewise, today’s schedulers are packaged within the scope of a single system, application, platform, or cloud service provider. These tools provide only a basic level of automation within their native systems. They cannot easily automate across systems or trigger event-based workflows.

Luckily, more advanced IT automation solutions exist to address these challenges and meet the needs of today’s businesses. This article reviews how job scheduling has been leveraged in the past and looks at how it’s being used in modern hybrid IT environments.

What is Job Scheduling?

Job scheduling is the orderly, reliable sequencing of batch processing automation, typically using calendars, dates, and times to schedule various business-critical processes. A typical example of job scheduling automation is when the IT Ops team schedules month-end financial reports to run the day after the finance team is required to close their monthly books.
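The month-end example can be sketched in a few lines of Python. This is a minimal illustration, not any particular scheduler's logic. It assumes the books close on the last calendar day of the month and that the report should wait for the next weekday. Both rules, and the function names, are hypothetical.

```python
import calendar
from datetime import date, timedelta


def month_end_close(year: int, month: int) -> date:
    """Assumed rule: the books close on the last calendar day of the month."""
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, last_day)


def report_run_date(year: int, month: int) -> date:
    """Return the first weekday after the close date (assumed business rule)."""
    run = month_end_close(year, month) + timedelta(days=1)
    while run.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        run += timedelta(days=1)
    return run


january_run = report_run_date(2024, 1)
august_run = report_run_date(2024, 8)
```

A real business calendar would also account for public holidays and fiscal periods, which is the kind of rule the native tools discussed later do not provide.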

What is a Job Scheduler?

Put simply, a job scheduler is a system-specific tool that relies on time-based triggers to automate batches of IT processes, such as database management.

Job schedulers are typically system- or application-specific tools that provide basic automation within their native system or app. Examples of native job schedulers include Windows Task Scheduler (Microsoft) and cron (Linux and UNIX). These tools execute tasks within a batch window, a period set aside after hours to run batch jobs. (Remember when “after hours” was still a thing?)
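To make the idea of a time-based trigger concrete, here is a simplified sketch of how a cron-style expression can be checked against the clock. It is an illustration only, not how cron itself is implemented. It handles `*`, single values, and comma-separated lists, and it ignores ranges, step values, and cron's special handling when both the day-of-month and day-of-week fields are restricted. The function names are hypothetical.

```python
from datetime import datetime


def field_matches(field: str, value: int) -> bool:
    """True if one cron field ('*', '5', or '1,15') matches the given value."""
    if field == "*":
        return True
    return value in {int(part) for part in field.split(",")}


def is_due(expr: str, now: datetime) -> bool:
    """Check a five-field expression: minute hour day-of-month month day-of-week."""
    minute, hour, day, month, weekday = expr.split()
    # cron counts Sunday as 0; Python's weekday() counts Monday as 0.
    cron_weekday = (now.weekday() + 1) % 7
    return (
        field_matches(minute, now.minute)
        and field_matches(hour, now.hour)
        and field_matches(day, now.day)
        and field_matches(month, now.month)
        and field_matches(weekday, cron_weekday)
    )


# A nightly job at 02:30, inside a typical batch window.
nightly_due = is_due("30 2 * * *", datetime(2024, 3, 1, 2, 30))
```

The trigger depends only on the clock. Nothing in the expression says whether an upstream job finished or whether the input data has arrived.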

Automation administrators prevent unnecessary interventions by creating job scheduling packages that control how batch jobs are processed on a single system.

What are the Benefits and Challenges of Job Scheduling Software?

The most significant benefit of job scheduling software? It’s readily available to IT Ops teams. Because these tools are built right into the world’s most popular operating systems, they’re widely used throughout the IT automation community, despite significant drawbacks like:

- No business calendars

- No dependency checking between tasks

- No centralized management functions to control or monitor the overall workload

- No audit trail to verify that jobs ran

- No automated restart/recovery of scheduled tasks

- No recovery from machine failure

- No flexibility in scheduling rules

- No support for cross-platform dependencies
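Dependency checking is one of the gaps listed above, and it is easy to see why it matters. The sketch below uses Python's standard `graphlib` module (Python 3.9 or later) to run jobs in dependency order and to skip anything downstream of a failure. The job names and the `fake_runner` function are hypothetical, and a real tool would add retries, logging, and an audit trail.

```python
from graphlib import TopologicalSorter

# Hypothetical jobs: each maps to the set of jobs that must finish first.
dependencies = {
    "extract_ledger": set(),
    "reconcile_accounts": {"extract_ledger"},
    "build_report": {"reconcile_accounts"},
    "email_report": {"build_report"},
    "rotate_logs": set(),
}


def run_in_order(deps, run_job):
    """Run jobs in dependency order; skip any job with a failed or skipped upstream."""
    succeeded, skipped = [], []
    blocked = set()  # jobs that failed or were skipped
    for job in TopologicalSorter(deps).static_order():
        if deps.get(job, set()) & blocked:
            blocked.add(job)  # propagate so jobs further downstream are skipped too
            skipped.append(job)
        elif run_job(job):
            succeeded.append(job)
        else:
            blocked.add(job)
    return succeeded, skipped


def fake_runner(job: str) -> bool:
    """Stand-in for real execution: pretend the reconciliation step fails."""
    return job != "reconcile_accounts"


succeeded, skipped = run_in_order(dependencies, fake_runner)
```

In this run, the report jobs are skipped because reconciliation failed, while the unrelated `rotate_logs` job still runs. A purely time-based scheduler would have started every job at its scheduled time regardless.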

As data centers have shifted from mainframes to distributed systems environments to hybrid IT environments, these native scheduling tools have struggled to keep up.

Job Scheduling in a Distributed System Environment

In concept, the demands for distributed scheduling and automation are similar to those of mainframe-driven, time-based sequencing and dependency models. However, the move to distributed computing has created new difficulties. For example, the lack of centralized management control becomes an issue when jobs run on many different servers, rather than on a single central computer.

As mentioned above, traditional job scheduling software generally runs jobs only on one machine. This one-machine limitation introduces several challenges for organizations, including:

- Silos: job schedulers generally don’t talk or work with each other across platforms. This lack of synchronization means that related mission-critical jobs on different operating systems might not run or might run out of sequence.

- Complexity: as maintaining and scheduling jobs grows more complicated, IT personnel need more time to perform their duties. Manually configuring and maintaining several schedulers on different operating systems increases complexity and potential errors.

- Manual intervention: job schedulers frequently require manual intervention to align scheduled processes across different machines, like when a file is sent via FTP to a second machine for processing.

- Extra programming: job scheduling frequently requires additional scripting or programming to fill processing gaps when coordinating between machines and operating systems.

- Missing links: traditional job schedulers do not have integrated or managed file transfer capabilities, which can stall the data supply chain until manual workarounds are configured.

- Rigidity: job scheduling functions best when each sequence of jobs begins and ends on a single platform. Moving jobs across different platforms can therefore be tricky, and it creates new challenges when business processes inevitably change or evolve.

- System incompatibility: job schedulers often lack compatibility with systems management solutions, leading to frequent critical update issues, errors, and additional network maintenance.

- Visibility: although scheduled jobs are often subject to service level agreements (SLAs), running multiple schedulers on multiple machines or platforms dramatically limits the ability to evaluate run times accurately.

- Time-based: job schedulers typically run batch processes at a specified time, often called time-based scheduling. However, many of today’s automation use cases require a real-time, event-driven approach.

Distributed system environments require a more advanced form of enterprise job scheduling: workload automation (WLA). Like its predecessor, a WLA tool can run batch jobs at specified times (often nightly or monthly). It also runs jobs across distributed, on-prem environments based on events happening in real time, such as specific data becoming available in SAP, a work request submitted via ServiceNow, or an approval granted via Teams or Slack.
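The difference between time-based and event-based triggering can be sketched with a small in-memory dispatcher. This is a toy model under stated assumptions: the `EventTriggers` class, the event names, and the payload fields are all hypothetical, and it does not call SAP, ServiceNow, Teams, or Slack. In a real WLA tool, events like these would arrive through integrations or agents.

```python
from collections import defaultdict
from typing import Callable


class EventTriggers:
    """Maps event types to the jobs that should run when the event occurs."""

    def __init__(self) -> None:
        self._jobs = defaultdict(list)

    def on(self, event_type: str, job: Callable[[dict], str]) -> None:
        """Register a job to run whenever the given event type is emitted."""
        self._jobs[event_type].append(job)

    def emit(self, event_type: str, payload: dict) -> list[str]:
        """Run every job registered for this event and collect the results."""
        return [job(payload) for job in self._jobs.get(event_type, [])]


triggers = EventTriggers()
triggers.on("file_arrived", lambda event: f"load {event['path']}")
triggers.on("approval_granted", lambda event: f"deploy {event['change_id']}")

# The load job starts as soon as the file shows up, not at a fixed time.
results = triggers.emit("file_arrived", {"path": "/inbound/orders.csv"})
```

Here the job starts when its input is ready, so there is no need to guess a safe start time or pad the batch window.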

Job Scheduling in a Hybrid Cloud or Hybrid IT Environment

Automating a hybrid IT environment that includes on-prem, cloud, and container-based systems presents many of the same challenges as on-prem distributed systems… on an even grander scale.

These complex environments require an automation solution beyond what’s offered by traditional job scheduling or even WLA tools. In 2020, Gartner introduced the category of service orchestration and automation platforms (SOAPs) to differentiate the latest advanced automation solutions that:

- Synchronize automation across hybrid IT environments composed of on-premises systems, cloud service providers, and containerized workloads.

- Securely integrate and schedule jobs across multiple applications and technologies. Recent studies show the average company uses over 100 SaaS applications — many of which need to connect to enterprise data across a wide range of functions and tools.

- Enable users throughout the business — including developers, data experts, and business analysts — to create their own automation workflows.

Summary

Modern job scheduling and workload automation tools go by a new name: service orchestration and automation platforms (SOAPs). This advanced approach offers all the benefits of traditional IT automation, while also synchronizing hybrid IT processes, orchestrating business services, and preparing IT teams for whatever comes next.

If you’re interested in taking your traditional job scheduling to the next level, read Gartner’s 2024 Magic Quadrant for Service Orchestration and Automation Platforms (SOAP) or contact us directly.
