What Is Batch Processing and How Has It Evolved?

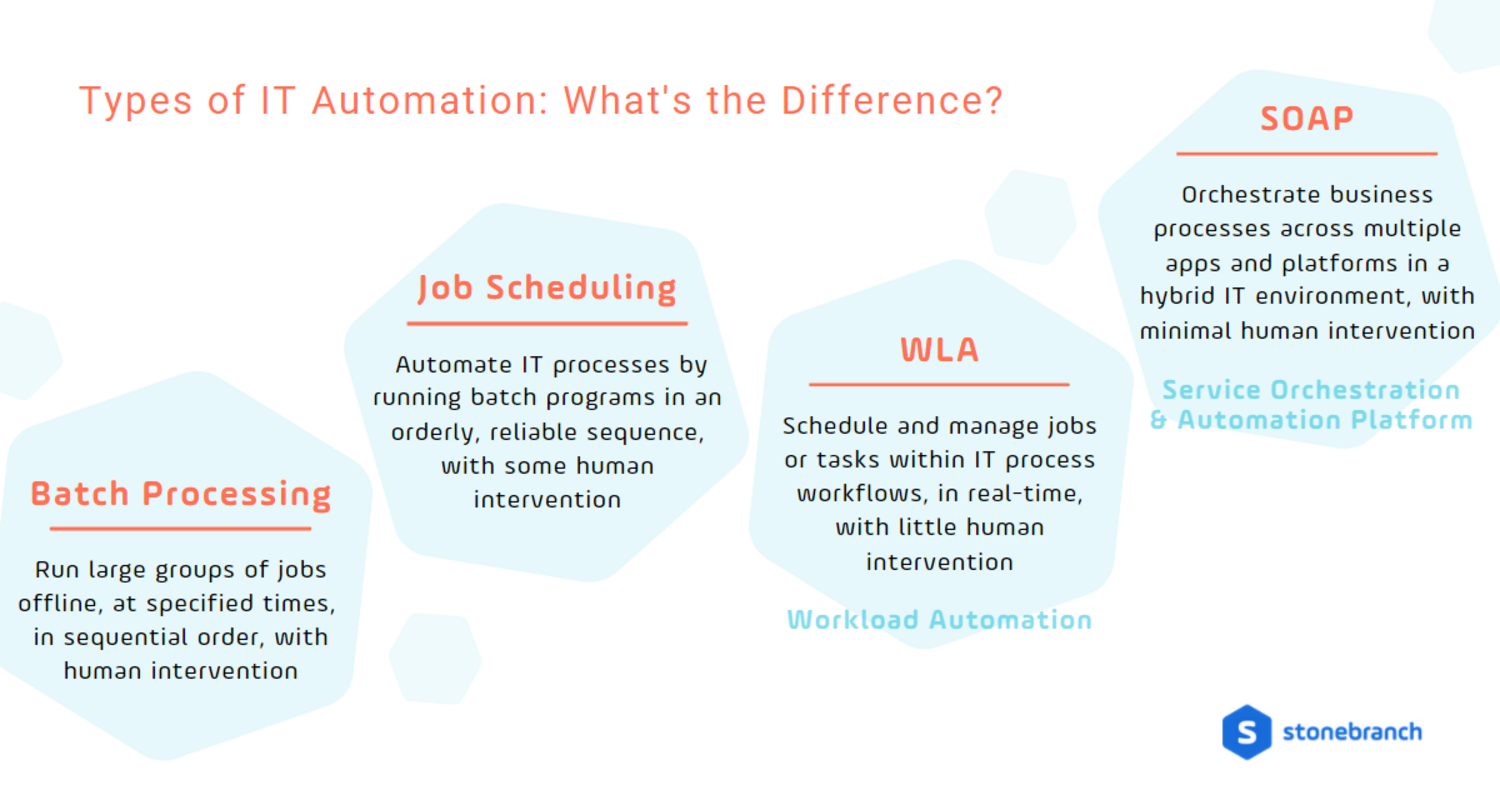

A quick guide to the history of batch processing and its modern successors in IT automation, such as job scheduling, workload automation, and service orchestration.

Editor’s Note: Given the continued evolution of IT automation, we thought it timely to edit and enhance our 2019 perspective on batch processing.

In batch processing, a computer automatically completes pre-defined tasks on large volumes of data, with minimal human interaction. The terminology dates back to the earliest days of computing when programmers would pile up stacks of punch cards to input the day’s data into a mainframe — each stack a batch to be processed.

Just as the definition of “minimal human interaction” has clearly evolved over the years, batch processing has advanced and inspired newer practices like job scheduling, workload automation, and service orchestration… though each has distinguishing characteristics. In this article, we’ll explore these nuances and see how batch processing fits into the modern landscape of IT automation that includes cloud computing, big data, artificial intelligence, and the internet of things.

The History of Batch Processing in IT Automation

In the early days of batch processing, data was collected over time, and automation was then scheduled to run on the grouped data during a batch window, a nightly timeframe set aside for processing large amounts of data. Because all computing was carried out on mainframe computers, making the best use of this limited resource was critical. Nightly batch windows freed up computing power during the day and allowed end users to run transactions without the system drag caused by high-volume batch processing.

IT operators submitted batch jobs based on an instruction book that told them what to do and how to handle certain conditions, such as when things went wrong. Jobs were typically scripted using job control language (JCL), a standard mainframe scripting language for defining how batch programs should be executed. For a mainframe computer, JCL:

- Identified the batch job submitter

- Defined what program to run

- Stated the locations of input and output

- Specified when a job was to be run

When the operator finished running a batch, they would submit another. Early batch processes were meant to bring some level of automation to these tasks. While the approach was effective at first, it quickly became problematic as the number of machines, jobs, and scheduling dependencies increased.

Batch Processing’s Place in IT Automation Today

Because batch processing was developed decades ago, its traditional form comes with limitations. This is especially true when compared to modern automation solutions that can conduct batch processes alongside real-time workflows in hybrid IT environments.

The limitations of traditional batch processing systems include:

- Considerable manual intervention required to keep batches running consistently

- Inability to centralize workloads between different platforms or applications

- Lack of an audit trail to verify the completion of jobs

- Rigid, time-based scheduling rules that don't account for modern, real-time data approaches

- No automated restart/recovery of scheduled tasks that fail
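Two of the gaps above, the missing audit trail and the lack of automated restart, are easy to picture in code. The following is a minimal Python sketch, not a description of how any particular scheduler handles recovery. The `run_with_retry` helper, the job name, and the simulated failure are all hypothetical and exist only for illustration.

```python
import time
from typing import Callable, List


def run_with_retry(job: Callable[[], None], name: str,
                   max_attempts: int = 3,
                   delay_seconds: float = 0.0) -> List[str]:
    """Run a job, retry it on failure, and return a simple audit trail.

    Hypothetical helper: real tools persist the trail and apply
    richer recovery rules than a fixed retry count.
    """
    audit: List[str] = []
    for attempt in range(1, max_attempts + 1):
        try:
            job()
        except Exception as exc:  # broad on purpose for the sketch
            audit.append(f"{name} attempt {attempt}: FAILED ({exc})")
            if attempt < max_attempts:
                time.sleep(delay_seconds)  # wait before restarting
        else:
            audit.append(f"{name} attempt {attempt}: OK")
            break
    return audit


# Simulated job that fails once with a transient error, then succeeds.
calls = {"count": 0}


def flaky_export() -> None:
    calls["count"] += 1
    if calls["count"] == 1:
        raise RuntimeError("input file not ready")


trail = run_with_retry(flaky_export, "nightly-export")
print("\n".join(trail))
```

In a traditional batch setup, the first failure would simply stop the job until an operator noticed. Here the job restarts on its own, and every attempt is recorded so completion can be verified later.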

Today’s IT automation solutions still enable batch processing for information that doesn’t need to be managed in real time, such as routine business processes, monthly financial statements, and employee payrolls. However, the need for more immediate data is growing.

Modern automation solutions offer flexibility to use:

- Micro-batch processing, which executes tasks on smaller groups of data. Instead of waiting until the end of the day (or longer) to run a batch, micro-batches might run every few seconds or minutes — quickly approaching real-time processing.

- Event-based processing, which executes tasks based on transactional triggers across a variety of systems, platforms, or applications.
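To make the micro-batch idea concrete, here is a small Python sketch that does the same work once as a single end-of-day batch and once in small groups. The `micro_batches` function and the sample transaction amounts (in cents) are assumptions made for illustration, not part of any specific product.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def micro_batches(records: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield successive lists of at most `size` records."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


# Hypothetical transaction amounts, in cents, from an upstream system.
transactions = [1250, 800, 10125, 375, 4000, 1999, 501]

# Traditional batch: one pass over everything at the end of the day.
daily_total = sum(transactions)

# Micro-batch: the same work done in small groups as data accumulates.
running_total = 0
for batch in micro_batches(transactions, size=3):
    running_total += sum(batch)
    print(f"processed {len(batch)} records, running total {running_total}")
```

Both approaches reach the same total. The difference is that the micro-batch version has a usable intermediate result after every small group instead of only at the end of the window.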

The most common of these more advanced automation solutions include job schedulers, workload automation (WLA) applications, and service orchestration and automation platforms (SOAPs).

What's the Difference?

What’s the Difference Between Batch Processing and Job Scheduling?

Think of job scheduling as batch processing 2.0 — they both automatically run sequential tasks on big chunks of data with minimal human interaction. However, a job scheduling tool can execute more complex processes based on calendar entries, dates, and times. Additionally, job scheduling is efficient enough to run these processes in the background during business hours or wait until after hours, based on the job’s required processing power.

In this iteration, “minimal human interaction” means that an IT administrator must monitor the scheduling tool to manually intercept tasks that are destined to fail (or have already). For example, if a recurring payroll process happens to fall on a weekend or holiday, the IT administrator has to intervene to redirect the process so employees can be paid on time.
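The payroll case is the kind of calendar rule that more capable tools can encode instead of leaving to an administrator. As a rough Python sketch, assuming a hypothetical `previous_business_day` function and a made-up holiday calendar, the adjustment might look like this:

```python
from datetime import date, timedelta
from typing import Set


def previous_business_day(run_date: date, holidays: Set[date]) -> date:
    """Move a run date back to the nearest weekday that is not a holiday."""
    adjusted = run_date
    # date.weekday(): Monday is 0, Saturday is 5, Sunday is 6
    while adjusted.weekday() >= 5 or adjusted in holidays:
        adjusted -= timedelta(days=1)
    return adjusted


# Hypothetical company calendar with a single holiday.
holidays = {date(2024, 11, 28)}

print(previous_business_day(date(2024, 6, 15), holidays))   # Saturday -> 2024-06-14
print(previous_business_day(date(2024, 11, 28), holidays))  # holiday  -> 2024-11-27
```

Moving the run earlier rather than later is a design choice made here so that employees are paid on time. A different process might need to roll forward instead.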

Job scheduling tools tend to be built into an operating system: think Task Scheduler on Windows, cron on Linux/UNIX, or Advanced Job Scheduler on IBM i.

But what happens when you need to run cross-platform jobs? Or if you want to trigger a workflow based on an event, such as a ticket submitted via ServiceNow? Workload automation, the next iteration of IT automation, addresses some of these shortcomings.

What’s the Difference Between Batch Processing and Workload Automation?

You might think that WLA would simply be batch processing 3.0, and it is, sort of. It easily manages traditional time-based, single-platform batch processes and job schedules. It can even centrally control the native schedulers built into each platform.

But WLA has additional capabilities. These tools are distinguished by their ability to execute workflows based on specific business processes and transactions, across multiple on-premises systems. They also use secure API connections to monitor and automate tasks in third-party applications. WLA workflows respond in real time to events like:

- specific data becoming available in SAP or Informatica

- a new employee added to PeopleSoft

- a work request submitted via ServiceNow

- an approval granted via Teams or Slack
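Event-based triggering of this kind can be pictured as a router that maps event types to workflows. The Python sketch below is a deliberately tiny, in-memory illustration. The `EventRouter` class, the event names, and the handler functions are all hypothetical, and it does not call any real SAP, PeopleSoft, ServiceNow, Teams, or Slack API.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Handler = Callable[[Dict[str, str]], str]


class EventRouter:
    """Tiny in-memory event router (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def on(self, event_type: str, handler: Handler) -> None:
        """Register a workflow to run when `event_type` occurs."""
        self._handlers[event_type].append(handler)

    def emit(self, event_type: str, payload: Dict[str, str]) -> List[str]:
        """Run every workflow registered for the event; return their results."""
        return [handler(payload)
                for handler in self._handlers.get(event_type, [])]


def provision_accounts(payload: Dict[str, str]) -> str:
    return f"provisioning accounts for {payload['name']}"


def start_request_workflow(payload: Dict[str, str]) -> str:
    return f"starting workflow for request {payload['ticket']}"


router = EventRouter()
router.on("employee.added", provision_accounts)
router.on("request.submitted", start_request_workflow)

# The workflow runs when the event arrives, not at a scheduled time.
results = router.emit("employee.added", {"name": "A. Example"})
print(results)
```

The contrast with time-based scheduling is that nothing here refers to a clock: a workflow starts because something happened in another system.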

Introduced by Gartner in their 2005 Hype Cycle for IT Operations Management report, WLA brought a new level of flexibility to IT automation… for a time. As new trends emerged and gained popularity — cloud computing, big data, artificial intelligence, and the internet of things, to name a few — IT leaders again sought out more advanced solutions.

What’s the Difference Between Batch Processing and Service Orchestration?

Service orchestration and automation platforms (SOAPs) represent the collective evolution of batch processing, job scheduling, and workload automation — plus some future-forward innovation. These platforms easily conduct traditional calendar- and event-based IT task automations, whether transactional or batched. In addition, SOAPs orchestrate business services by:

- Synchronizing automation across hybrid IT environments spanning on-premises systems, cloud service providers, and containerized microservices.

- Integrating with third-party technologies — cloud service providers, ETL and ELT tools, ERPs, containers, and applications like Salesforce, Slack, and Teams — to coordinate and automate the flow of data across disparate functions and platforms.

- Empowering users of all kinds, from DevOps to DataOps to business analysts, with intuitive do-it-yourself workflow creation and role-appropriate access permissions.

- Standardizing business and IT processes across the enterprise to improve functionality and reduce costs.

Gartner introduced the SOAP category in 2020 in recognition of the significant advancement in IT automation these platforms represent. Gartner predicts that “By year end 2024, 80% of organizations currently delivering workload automation will be using service orchestration and automation platforms to orchestrate cross-domain workloads.”

In Summary

Batch processing has come a long way, yet it still has a place in modern IT automation. Advanced automation platforms like SOAPs can easily accommodate the time-based scheduling of tasks for batch and micro-batch processing. Additionally, SOAPs enable event-based processing, business service orchestration, and hybrid IT synchronization.

Want to learn more? Check out Gartner’s 2023 Market Guide for Service Orchestration and Automation Platforms.
