Serverless Containers, AI Workloads, and Edge: Why Container Management is Going Mainstream

Explore Gartner-backed predictions about the rise of serverless containers, AI workloads, and edge computing. Learn how Stonebranch helps automate container orchestration in hybrid IT environments.

The container revolution has entered the mainstream of enterprise infrastructure and operations (I&O) strategy. What began as a developer-first movement is now reshaping I&O across nearly every industry. As IT leaders pursue speed, resilience, and cost-efficiency, container management has become a critical success factor.

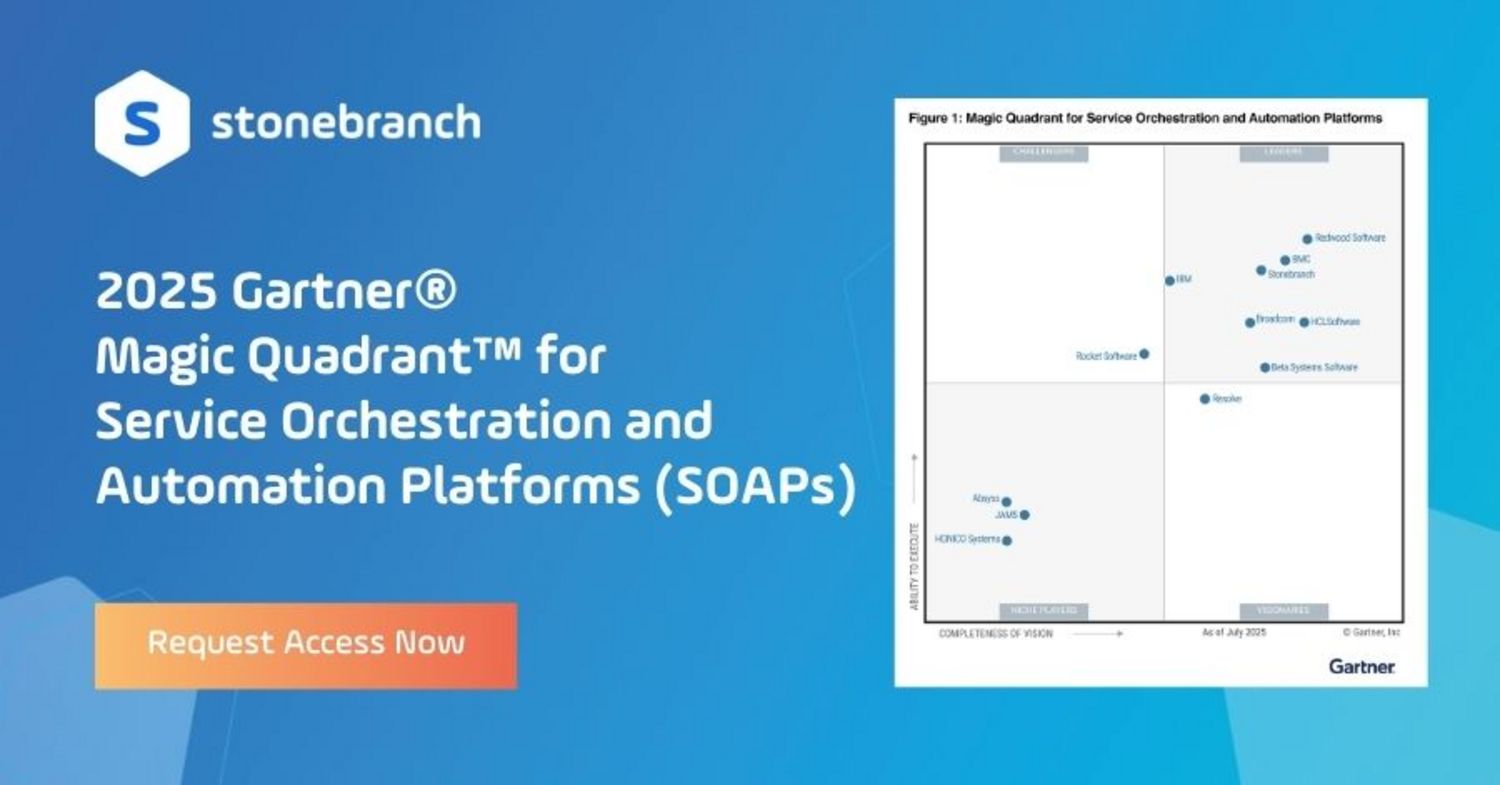

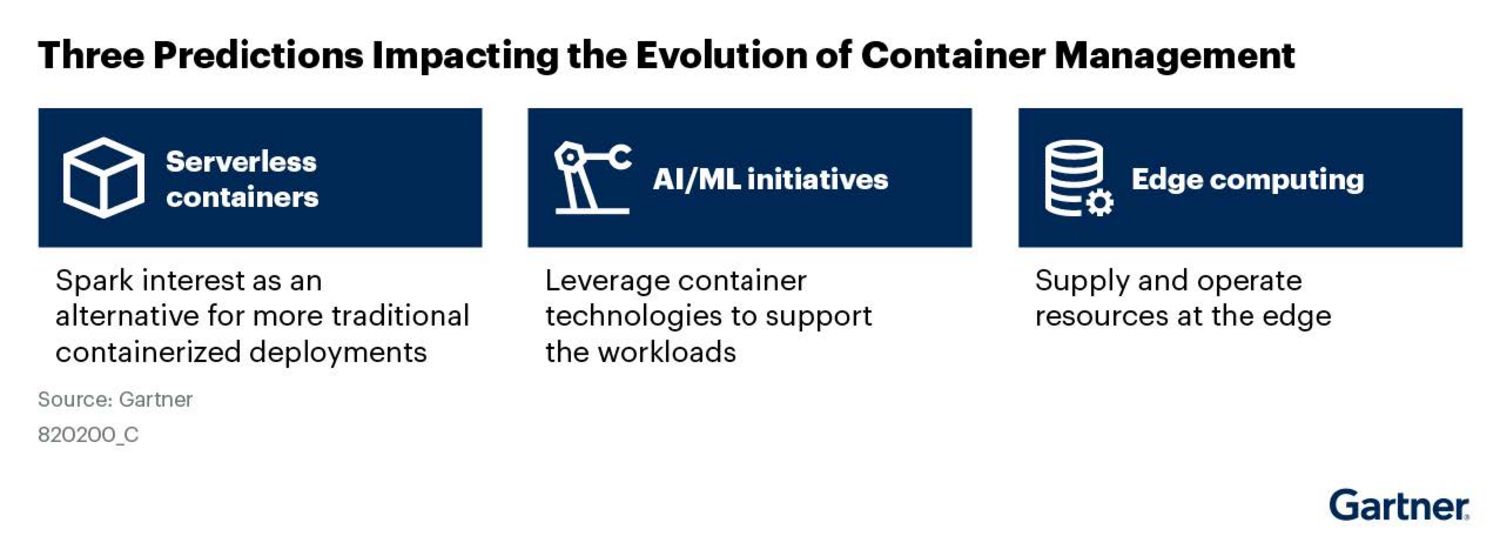

The Gartner Predicts 2025: Container Management Goes Mainstream report expects:

- By 2027, more than 50% of all container management deployments will use serverless container management services (up from less than 25% in 2024)

- By 2027, more than 75% of AI/ML deployments will run in containers

- By 2028, 80% of custom software running at the physical edge will be deployed in containers

This post breaks down Gartner’s predictions and explains how Stonebranch can help you automate container workloads in hybrid, cloud-native, and edge environments.

Insight #1: Serverless Container Management Will Become the Norm

“By 2027, more than half of all container management deployments will involve serverless container management services.” — Gartner

Gartner predicts a major shift away from self-managed container orchestration, such as running your own Kubernetes clusters, toward serverless container services. This cloud-native deployment model lets you run containerized applications without managing the underlying infrastructure (servers, virtual machines, or Kubernetes clusters). It combines the flexibility of containers with the simplicity of serverless computing.

Why this matters:

- Organizations want speed to market.

- Teams lack deep Kubernetes expertise.

- Managing container nodes adds unnecessary overhead.

Why Organizations Are Re-Evaluating Legacy Virtualization Platforms

Rising costs, vendor consolidation, and changing application needs are pushing enterprises to rethink their infrastructure. Instead of sticking with traditional virtualization platforms like VMware, IT leaders are turning to flexible, cloud-native options.

This shift goes beyond the typical lift-and-shift migration. Enterprises are choosing to re-architect legacy applications — or replace them entirely — with solutions designed to run in containerized, orchestrated environments. These platforms offer greater agility, scalability, and alignment with DevOps and platform engineering models.

How Stonebranch Supports Serverless Container Management

Stonebranch enables serverless orchestration through prebuilt integrations with major platforms. A standout example is the Google Kubernetes Engine (GKE) job task, which lets you run tasks in a GKE environment without dealing with complex pod configuration or hand-written YAML.
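To make the "pod setups and YAML" point concrete, here is a minimal sketch of the kind of Kubernetes Job manifest a team would otherwise write and maintain by hand. It is not Stonebranch's implementation or the GKE job task's internals; the job name, image, and command are hypothetical, and only standard `batch/v1` Job fields are used.

```python
import json


def build_job_manifest(name: str, image: str, command: list[str]) -> dict:
    """Return a minimal Kubernetes batch/v1 Job manifest as a plain dict."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "backoffLimit": 2,  # number of retries before the Job is marked failed
            "template": {
                "spec": {
                    "restartPolicy": "Never",  # Jobs require Never or OnFailure
                    "containers": [
                        {"name": name, "image": image, "command": command}
                    ],
                }
            },
        },
    }


if __name__ == "__main__":
    manifest = build_job_manifest(
        name="nightly-report",                      # hypothetical job name
        image="registry.example.com/reports:1.0",   # hypothetical image
        command=["python", "run_report.py"],        # hypothetical command
    )
    # Kubernetes accepts JSON manifests as well as YAML.
    print(json.dumps(manifest, indent=2))
```

Even this stripped-down manifest needs four levels of nesting to say "run this image once," which is the overhead a prebuilt job task is meant to hide.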

With Universal Automation Center (UAC), platform teams can:

- Schedule containerized workloads across cloud-native environments.

- Integrate orchestration into broader workflows.

- Enable DevOps self-service without exposing backend infrastructure.

Insight #2: AI/ML Workloads Will Run Primarily in Containers

“By 2027, more than 75% of all AI/ML deployments will use container technology.” — Gartner

AI/ML workloads are exploding in volume and complexity. Gartner highlights containers as the ideal environment for AI pipelines because of their:

- Scalability and elasticity

- Ease of provisioning dev environments

- Ability to scale compute for model training

Yet many data scientists and AI/ML teams lack experience with Kubernetes and DevOps. This creates a growing need for MLOps and platform engineering teams that can enable containerized AI workflows while shielding citizen automators from infrastructure complexity.

How to Orchestrate AI/ML Workloads with Stonebranch

Stonebranch acts as a control plane for container-based AI pipeline orchestration that integrates into the broader IT ecosystem. With UAC, IT Ops and data platform teams can:

- Automate training and inference across cloud-based containers

- Trigger workloads based on time, performance, or external system events

- Manage and monitor jobs in a unified orchestration layer

This end-to-end orchestration helps AI operations scale with minimal friction, whether you’re running in the cloud, at the edge, or on-prem.
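As a rough illustration of the three trigger types mentioned above (time, performance, and external events), the sketch below shows how such rules might be evaluated in plain Python. Everything here is hypothetical: the `Signals` fields, the thresholds, and the event name are assumptions for illustration, not UAC's trigger model or API.

```python
from dataclasses import dataclass
from datetime import datetime, time


@dataclass
class Signals:
    """Snapshot of the inputs a trigger can inspect (all names hypothetical)."""
    now: datetime
    gpu_utilization: float      # 0.0 to 1.0, as reported by a monitoring system
    pending_events: list[str]   # event names received from external systems


def should_run_training(signals: Signals) -> tuple[bool, str]:
    """Decide whether to start a training job and report which rule fired."""
    # Event trigger: an external system announced new data.
    if "new-training-data" in signals.pending_events:
        return True, "event"
    # Time trigger: a nightly window from 02:00 up to (not including) 03:00.
    if time(2, 0) <= signals.now.time() < time(3, 0):
        return True, "time"
    # Performance trigger: start work when the GPUs are mostly idle.
    if signals.gpu_utilization < 0.20:
        return True, "performance"
    return False, "none"
```

For example, `should_run_training(Signals(datetime(2025, 1, 6, 14, 30), 0.85, ["new-training-data"]))` returns `(True, "event")`: the event rule fires even though it is mid-afternoon and the GPUs are busy.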

Insight #3: Containers Will Power Edge Deployments

“By 2028, 80% of custom software running at the physical edge will be deployed in containers.” — Gartner

Edge computing is booming, especially in industries like retail, manufacturing, and logistics. Organizations need to place compute resources close to users and machines for real-time processing and localized data handling.

Containers are ideally suited for edge environments since they offer:

- Lightweight deployment models

- Fast boot times

- Consistent runtime environments across varied hardware

Gartner also notes the growing importance of cluster fleet management to scale containers across distributed environments.

How Stonebranch Approaches Edge Orchestration

Stonebranch enables edge orchestration with:

- Zero-touch infrastructure deployment using GitOps-style pipelines.

- Policy-based automation that ensures consistent configuration across remote nodes.

- Unified management across multicloud, hybrid, and edge environments.

With UAC, edge automation becomes part of the broader IT strategy, rather than a siloed outlier.
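The idea of keeping configuration consistent across remote nodes can be sketched as a simple drift check: compare one desired configuration against what each node reports, and flag the differences. This is a generic illustration under assumed names (the node IDs, config keys, and `find_drift` helper are hypothetical), not a description of how Stonebranch implements policy-based automation.

```python
from typing import Optional

# Per-node drift: config key -> (expected value, value the node reported or None)
Drift = dict[str, tuple[str, Optional[str]]]


def find_drift(desired: dict[str, str],
               reported: dict[str, dict[str, str]]) -> dict[str, Drift]:
    """Return, for each out-of-policy node, the keys that differ from `desired`."""
    drift: dict[str, Drift] = {}
    for node, config in reported.items():
        diffs = {
            key: (expected, config.get(key))
            for key, expected in desired.items()
            if config.get(key) != expected
        }
        if diffs:
            drift[node] = diffs
    return drift


if __name__ == "__main__":
    desired = {"image": "app:1.4", "log_level": "info"}
    reported = {
        "store-001": {"image": "app:1.4", "log_level": "info"},  # compliant
        "store-002": {"image": "app:1.3", "log_level": "info"},  # stale image
        "store-003": {"image": "app:1.4"},                       # missing key
    }
    for node, diffs in find_drift(desired, reported).items():
        print(node, diffs)
```

In a GitOps-style setup, `desired` would typically come from a version-controlled repository, and any node that appears in the result would be reconciled back to it.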

Final Thought: Move Beyond Task Automation

With serverless models simplifying operations, AI driving demand, and edge computing expanding the enterprise footprint, IT teams must adopt automation tools built for this new reality.

Automation is no longer just about individual tasks. It’s about orchestrating how everything works together. Whether you’re modernizing infrastructure, supporting new predictive analysis initiatives, or monitoring equipment across global locations, Stonebranch helps you build a connected, scalable automation approach that fits your enterprise.
