What Is a DataOps Tool? Five Core Capabilities

Discover how DataOps tools streamline data operations with automation, orchestration, and observability, accelerating insights and improving collaboration across the modern data pipeline.

DataOps tools are gaining serious momentum. What began as a methodology has evolved into a critical technology category. The 2025 Gartner® Market Guide for DataOps Tools, now in its fourth edition, offers the most detailed guidance yet for data and analytics (D&A) leaders. It outlines the key capabilities that define the category and explains how these tools support the automation and orchestration of the entire data analytics pipeline.

What is a DataOps Tool?

DataOps tools help teams work better together by making it easier to manage and deliver data. They connect the people who manage data with those who use it, automate key steps in the data process, and speed up the delivery of insights that drive business results.

“DataOps tools address inefficiencies and misalignments between data management and data consumption by streamlining data delivery, aligning cross-functional data team personas, and operationalizing key data artifacts, including processes, pipelines, data assets, and platforms. This integrated approach accelerates the delivery of business value by enabling faster, more reliable, and outcome-driven data operations.”

— 2025 Gartner Market Guide for DataOps Tools

For D&A leaders who want to boost operational excellence and productivity, Gartner recommends using a DataOps tool to combine data management tasks that are currently handled by multiple technologies into one comprehensive data pipeline lifecycle.

Who Are DataOps Tools For?

While the primary buyers of DataOps tools are D&A leaders — such as chief data and analytics officers (CDAOs) — the primary users are data managers and data consumers:

- Data managers include data engineers, operations engineers, database administrators, and data architects.

- Data consumers include business analysts, business intelligence developers, data scientists, and business technologists (line-of-business users who are domain experts).

DataOps tools provide a unified experience for both data managers and data consumers, driving productivity and operational excellence.

“Technology silos impede organizations from delivering trusted, secure, high-quality, and consumable data on time. DataOps tools reduce friction points through data pipeline orchestration, automation, and observability in complex environments.”

— 2025 Gartner Market Guide for DataOps Tools

Here at Stonebranch, we also see interest in DataOps from traditional IT Ops leadership, not just D&A leaders. Their main driver is delivering automation as a service. When IT Ops is involved, it typically works in partnership with the data teams: IT Ops gains visibility and governance, while data teams are free to focus on innovation.

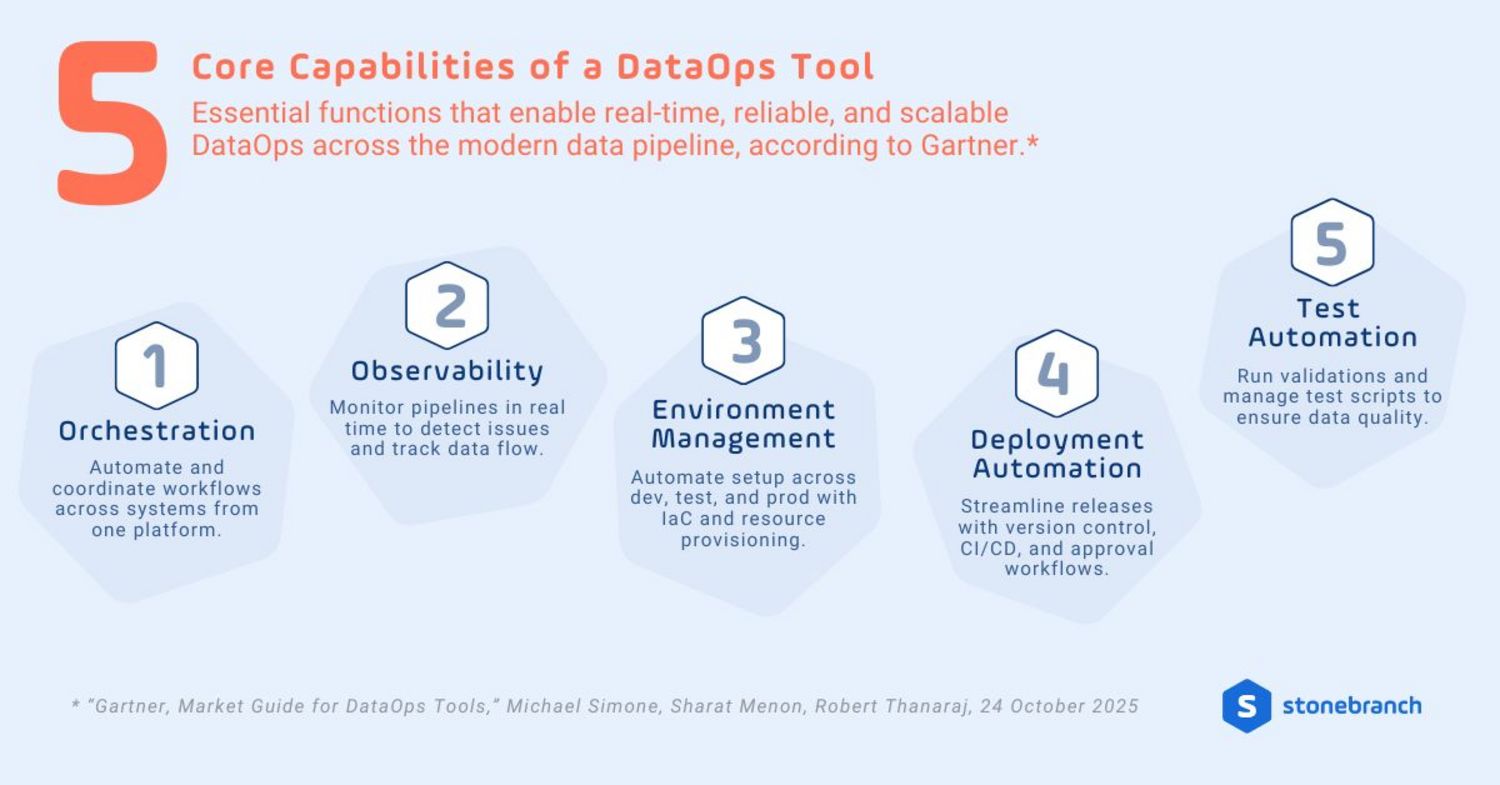

The 5 Core Capabilities of a DataOps Tool, According to Gartner*

DataOps brings automation and agility to the full data transformation lifecycle. Five core capabilities are shared by the best DataOps tools:

- Orchestration: coordinate and automate data workflows from a central place, helping teams manage complex pipelines more easily by connecting systems, scheduling tasks, and tracking activity.

- Observability: monitor data pipelines to detect issues, track performance and data flow, and flag anomalies using real-time and historical data.

- Environment Management: reduce manual setup by using templates and automation to manage development, testing, and production environments. This covers infrastructure-as-code (IaC), resource provisioning, and credential management to ensure each pipeline runs the same way across every stage.

- Deployment Automation: manage version control, DevOps integration, pipeline releases, and approvals, making it easier to update and roll out changes using agile CI/CD (continuous integration/continuous delivery) practices.

- Test Automation: run test versions of pipelines to catch errors, validate business rules, manage test scripts, and ensure data quality before changes move to production.

Each of these capabilities is designed to streamline real-time data operations and accelerate results for D&A teams. Orchestration and observability are called out as must-have capabilities for enabling the end-to-end flow of data.
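To make the orchestration capability more concrete, here is a minimal Python sketch of its core idea: run each pipeline step only after its upstream steps have finished, and keep a record of what ran. The `run_pipeline` helper and the task names are hypothetical, and the sketch relies on the standard library's `graphlib.TopologicalSorter` (Python 3.9+). It illustrates dependency ordering only; it is not how Gartner defines orchestration or how UAC or any other DataOps tool implements it.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Callable, Dict, List, Sequence


def run_pipeline(
    tasks: Dict[str, Callable[[], None]],
    dependencies: Dict[str, Sequence[str]],
) -> List[str]:
    """Run each task only after all of its upstream tasks have run.

    Returns task names in execution order as a minimal activity log.
    Raises graphlib.CycleError if the dependencies form a cycle.
    """
    sorter = TopologicalSorter()
    for name in tasks:
        # Register every task, including ones with no upstream dependencies.
        sorter.add(name, *dependencies.get(name, ()))

    run_log: List[str] = []
    # static_order() yields each task only after all of its predecessors.
    for name in sorter.static_order():
        tasks[name]()         # execute the step
        run_log.append(name)  # track activity: record what ran, in order
    return run_log


# Hypothetical pipeline: two extracts feed a transform, which feeds a load.
events: List[str] = []
tasks = {
    "extract_orders": lambda: events.append("orders extracted"),
    "extract_customers": lambda: events.append("customers extracted"),
    "transform": lambda: events.append("orders joined to customers"),
    "load_warehouse": lambda: events.append("warehouse loaded"),
}
dependencies = {
    "transform": ["extract_orders", "extract_customers"],
    "load_warehouse": ["transform"],
}

order = run_pipeline(tasks, dependencies)
print(order)
```

A production orchestrator layers scheduling, event triggers, retries, and connectors to external systems on top of this basic dependency ordering.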

New for 2025: The Rise of AI-Augmented DataOps

Gartner calls out a major evolution in the 2025 guide: the emergence of AI-augmented DataOps. AI-augmented DataOps platforms go beyond basic automation by embedding intelligence that helps DataOps teams operate faster and more effectively, with less manual effort.

AI features and machine learning models are becoming a key differentiator. Leading platforms are beginning to offer:

- Proactive issue detection based on live and historical metadata

- AI-driven optimization that recommends workflow improvements

- Predictive analytics to catch data quality issues before they escalate

- Dynamic workload balancing across hybrid and multi-cloud infrastructures

While these capabilities are still maturing, Gartner sees them as foundational for the future of DataOps. Tools that integrate AI into their orchestration and observability layers will empower data teams to become more proactive, responsive, and scalable — especially as demand for real-time, trusted data continues to grow.
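Proactive issue detection starts from a simple idea: compare each new pipeline run against that pipeline's own history. The sketch below uses a plain z-score on hypothetical run durations as a stand-in. It is a basic statistical rule, not an AI or machine learning model, and the `is_anomalous` helper and its default threshold of 3.0 are illustrative assumptions rather than how any particular product works.

```python
from statistics import mean, stdev
from typing import Sequence


def is_anomalous(history: Sequence[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` sample standard deviations
    from the mean of `history` (a simple z-score rule)."""
    if len(history) < 2:
        return False  # not enough history to estimate spread
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        # A perfectly stable baseline: any change at all is unusual.
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Hypothetical run durations (minutes) for one nightly pipeline.
past_runs = [42.0, 40.5, 43.1, 41.8, 42.6, 39.9, 41.2]
print(is_anomalous(past_runs, 41.5))  # False: within the normal range
print(is_anomalous(past_runs, 75.0))  # True: far slower than usual
```

The same check applies to other run metadata, such as row counts or file sizes; AI-augmented tools aim to go further by learning seasonality and cross-pipeline patterns that a fixed threshold cannot capture.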

Putting the Ops in DataOps with Stonebranch

DataOps implementation has grown far beyond basic scheduling and task automation. Today, it’s about smart orchestration, AI-guided decisions, and full visibility across your data environment.

Organizations need to move fast — and with confidence. That means delivering data that’s not just on time, but also reliable, governed, and AI-ready. To do that, modern DataOps platforms must work across hybrid infrastructures, automate complex workflows, and provide real-time insights to help teams stay ahead.

Stonebranch is recognized in the 2025 Gartner Market Guide for DataOps Tools as a representative vendor in this growing category. Our approach is built to meet these evolving needs, powered by two tightly integrated solutions:

- Universal Automation Center (UAC): A hybrid orchestration platform built to centralize control of data pipelines across on-premises, cloud, and containerized environments. UAC supports event-based triggers, CI/CD workflows, and AI-embedded orchestration to reduce manual intervention. The platform integrates with data pipeline-centric tools, including ETL tools, data lakes, data warehouses, and visualization tools.

- Universal Data Mover Gateway (UDMG): An enterprise-grade, B2B managed file transfer (MFT) solution that integrates tightly with data pipelines and governance policies, ensuring the secure movement of business-critical data across organizational boundaries.

Together, UAC and UDMG give D&A teams a unified platform to automate, observe, and optimize every phase of the data lifecycle — from ingestion and transformation to delivery and monitoring. Built-in support for infrastructure-as-code (IaC), AI-driven observability, and agentic coordination patterns ensures that operations scale reliably even as environments grow more complex.

UAC is designed for today’s challenges... and for whatever comes next. To learn more, browse our customer success stories or contact the Stonebranch sales team to request a demonstration of the platform.

* “Gartner, Market Guide for DataOps Tools,” Michael Simone, Sharat Menon, and Robert Thanaraj, 24 October 2025.

Frequently Asked Questions

What is a DataOps Tool?

A DataOps tool is a software solution designed to streamline and improve an organization's data operations. It facilitates collaboration among data teams, including DataOps engineers, data analysts, and data science professionals, by automating workflows and integrating diverse data sources. As demand for real-time data analytics and big data solutions grows, adopting a DataOps strategy, backed by a robust DataOps platform, becomes critical for organizations that want to harness their data assets efficiently.

What Are the Five Critical Capabilities of DataOps Tools?

The five critical capabilities of DataOps tools are data pipeline orchestration, observability, environment management, deployment automation, and test automation. These capabilities enable organizations to manage growing data volumes effectively, ensuring high data quality and seamless data flow across diverse data environments. Each capability plays a vital role in applying DataOps practices and improving overall data management.

How Does Data Quality Affect DataOps?

Data quality is a cornerstone of successful DataOps. Poor data quality can lead to inaccurate analysis and flawed decision-making. Organizations should implement data validation techniques and data profiling tools to ensure that data entering their pipelines meets quality standards from the moment of ingestion. This minimizes risk and boosts confidence in analytics outcomes.
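Validation at ingestion can be sketched as a set of rules applied to each incoming batch, with failing records set aside instead of flowing downstream. The field names, the rules, and the `validate_batch` helper below are hypothetical; in practice, teams usually rely on a dedicated data quality or profiling tool rather than hand-written checks.

```python
from typing import Any, Callable, Dict, List, Tuple

Record = Dict[str, Any]

# Hypothetical quality rules: (rule name, check that returns True when the record passes).
RULES: List[Tuple[str, Callable[[Record], bool]]] = [
    ("order_id present", lambda r: bool(r.get("order_id"))),
    ("amount is non-negative",
     lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0),
    ("currency is a 3-letter code",
     lambda r: isinstance(r.get("currency"), str) and len(r["currency"]) == 3),
]


def validate_batch(records: List[Record]) -> Tuple[List[Record], List[Tuple[Record, List[str]]]]:
    """Split a batch into records that pass every rule, and rejected records
    paired with the names of the rules they failed."""
    accepted: List[Record] = []
    rejected: List[Tuple[Record, List[str]]] = []
    for record in records:
        failures = [name for name, check in RULES if not check(record)]
        if failures:
            rejected.append((record, failures))  # quarantine for review
        else:
            accepted.append(record)              # safe to pass downstream
    return accepted, rejected


batch = [
    {"order_id": "A-1", "amount": 19.99, "currency": "USD"},
    {"order_id": "", "amount": -5, "currency": "USD"},
    {"order_id": "A-3", "amount": 12.50, "currency": "euro"},
]
good, bad = validate_batch(batch)
print(len(good), len(bad))  # 1 2
```

Returning the names of the failed rules, rather than a simple pass/fail flag, gives data teams the detail they need to fix problems at the source.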

What Role Does Data Integration Play in DataOps?

Data integration is crucial for unifying diverse raw data sources into a cohesive system. Organizations need data integration tools that can handle complex data environments and seamlessly merge data assets from cloud sources, data lakes, and data warehouses. This capability enables real-time data access and improves overall data management.
