Databricks: Automate Jobs and Clusters

This integration lets users orchestrate and automate Databricks jobs and clusters end to end, in a Databricks environment running on either AWS or Azure.

Key Features:

- Uses the Python requests module to make REST API calls to the Databricks environment.

- Connects to the Databricks environment using the Databricks URL and the user's bearer token.

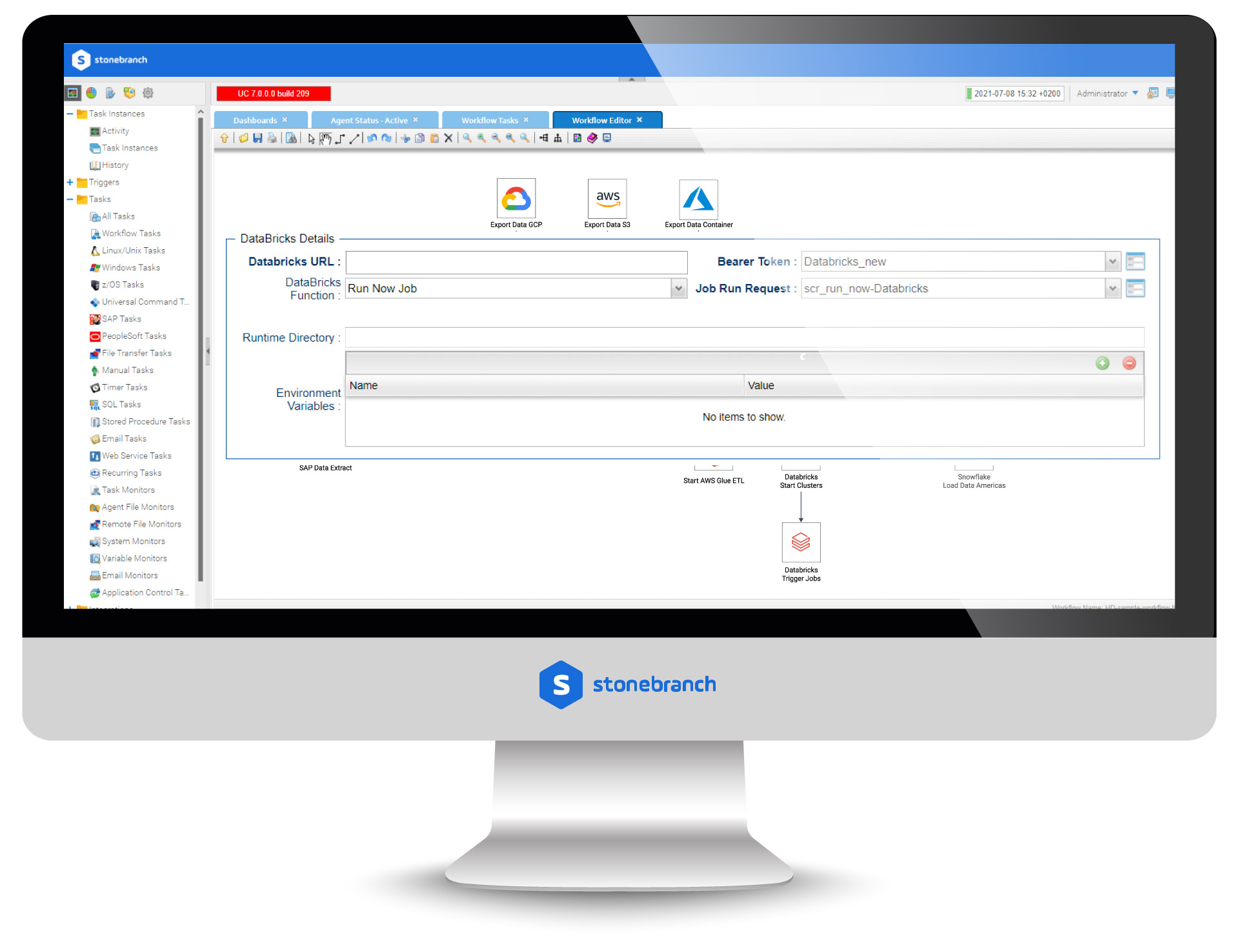

- With respect to Databricks jobs, this integration can perform the below operations:

- Create and list jobs.

- Get job details.

- Run new jobs.

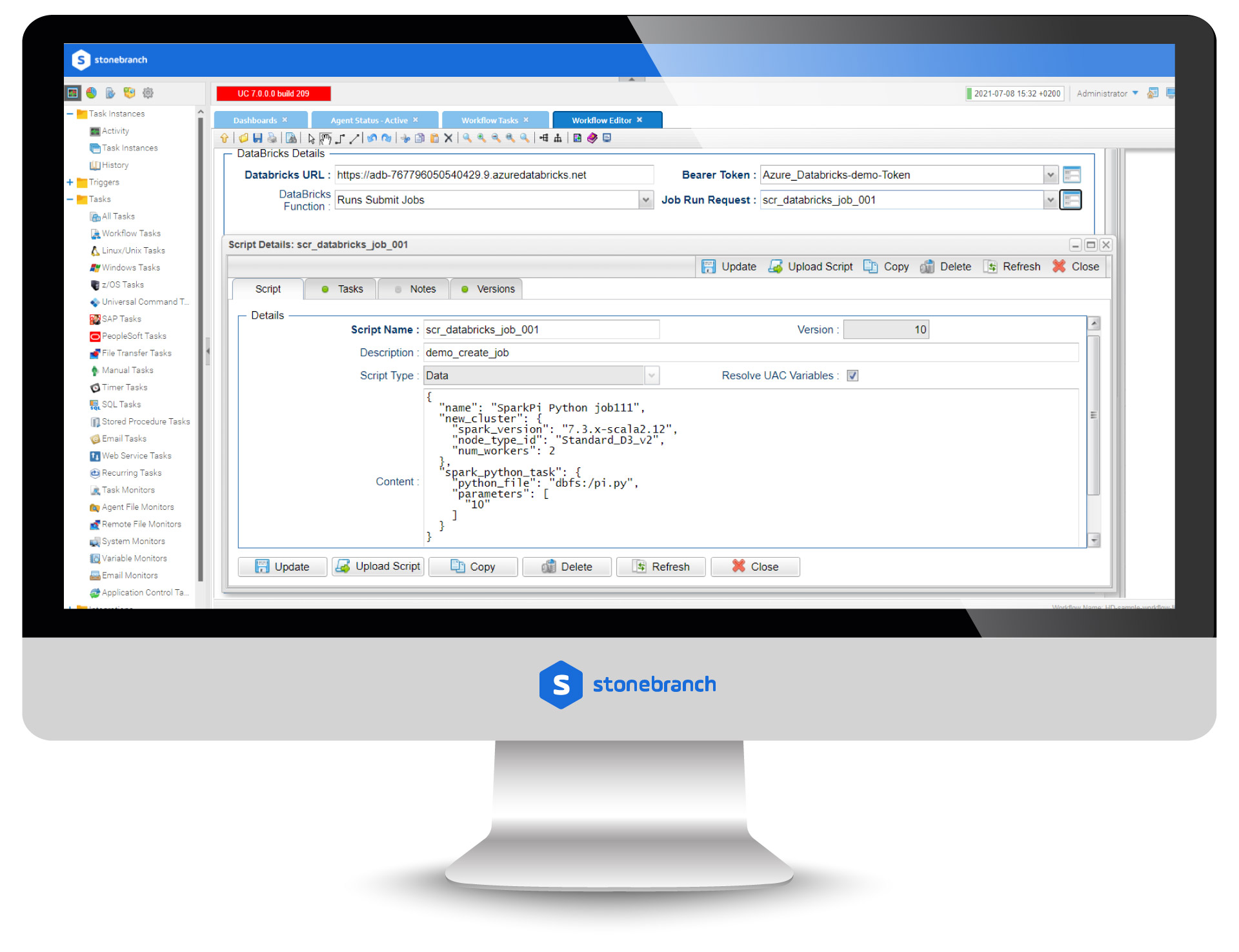

- Run submit jobs.

- Cancel run jobs.

- With respect to the Databricks cluster, this integration can perform the below operations:

- Create, start, and restart a cluster.

- Terminate a cluster.

- Get a cluster-info.

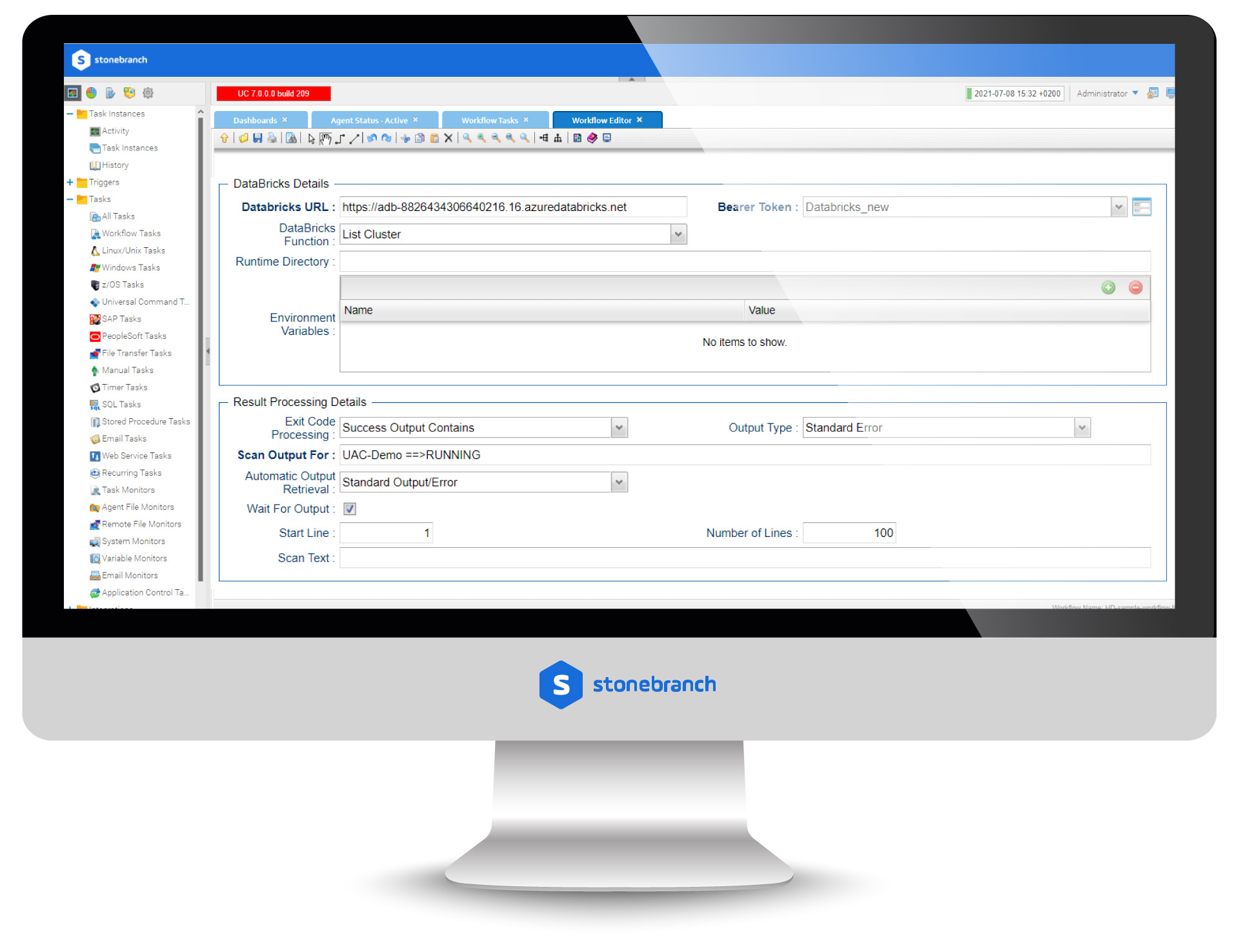

- List clusters.

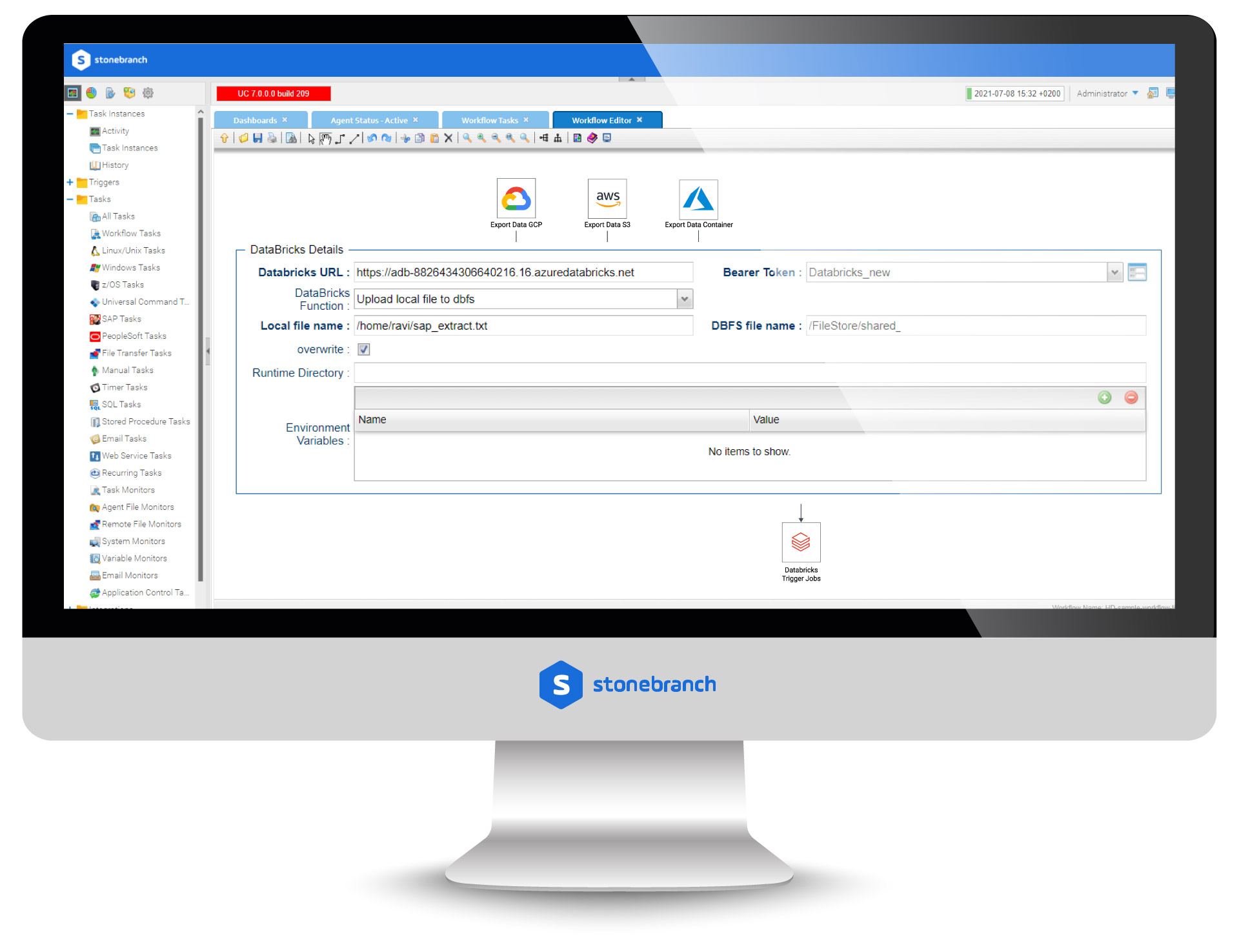

- For Databricks DBFS, this integration can also upload larger files.
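The connection pattern described in the features above (a workspace URL plus a bearer token, sent through the requests module) can be sketched roughly as follows. This is an illustration only, not the integration's actual code: the helper names `build_call` and `send`, the example workspace URL, the placeholder token, and the job ID are all hypothetical, and the API versions in the paths (`/api/2.1/jobs/run-now`, `/api/2.0/clusters/list`) are assumed rather than taken from the integration.

```python
def build_call(workspace_url, token, method, path, payload=None):
    """Return keyword arguments that can be passed to requests.request().

    workspace_url and token stand in for the Databricks URL and the
    user bearer token mentioned above (example values, not real ones).
    """
    return {
        "method": method,
        "url": workspace_url.rstrip("/") + path,
        # Databricks REST calls authenticate with a bearer token header.
        "headers": {"Authorization": f"Bearer {token}"},
        "json": payload,
        "timeout": 30,
    }


def send(call):
    """Send a prepared call and return the decoded JSON response."""
    # Imported here so the request-building part works without network access
    # or the requests package; the integration itself is stated to use requests.
    import requests

    response = requests.request(**call)
    response.raise_for_status()
    return response.json()


# Hypothetical values for illustration only.
WORKSPACE_URL = "https://example.cloud.databricks.com/"
TOKEN = "dapi-EXAMPLE"

# Trigger an existing job (assumed endpoint version 2.1).
run_now = build_call(WORKSPACE_URL, TOKEN, "POST",
                     "/api/2.1/jobs/run-now", {"job_id": 123})

# List clusters in the workspace (assumed endpoint version 2.0).
list_clusters = build_call(WORKSPACE_URL, TOKEN, "GET",
                           "/api/2.0/clusters/list")
```

Calling `send(run_now)` against a real workspace would submit the request. Keeping request construction separate from sending makes the URL, header, and payload easy to inspect before anything reaches Databricks.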

What's New in V1.4.0

Enhancements

- Added: repair_job option to support repairing failed or incomplete jobs.

- Added: Support for Databricks multi-task run job outputs in Run Job.

- Added: repair parameter for run_now and run_submit functions to enable on-demand repair.

- Added: Enhanced Databricks job logging for improved observability and debugging.
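How the integration implements its repair option is not described here. As a rough sketch, a repair request against the Databricks Jobs API could be assembled as shown below, assuming the `POST /api/2.1/jobs/runs/repair` endpoint. The function name `build_repair_payload` and its parameters are hypothetical and do not come from the integration.

```python
# Assumed endpoint for repairing a job run (not confirmed by the integration).
REPAIR_PATH = "/api/2.1/jobs/runs/repair"


def build_repair_payload(run_id, failed_only=True, task_keys=None,
                         latest_repair_id=None):
    """Build the JSON body for a repair request (hypothetical helper).

    run_id           -- ID of the job run to repair
    failed_only      -- re-run every failed task when no task_keys are given
    task_keys        -- explicit task keys to re-run; takes priority
    latest_repair_id -- ID of the previous repair, if this run was
                        already repaired once
    """
    payload = {"run_id": run_id}
    if task_keys:
        payload["rerun_tasks"] = list(task_keys)
    elif failed_only:
        payload["rerun_all_failed_tasks"] = True
    if latest_repair_id is not None:
        payload["latest_repair_id"] = latest_repair_id
    return payload


# Example: repair all failed tasks of a (hypothetical) run 42.
example_payload = build_repair_payload(42)
```

The resulting dictionary would be sent as the JSON body of a POST to `REPAIR_PATH`, using the same bearer-token authentication as every other call.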

Fixes

- Fixed: Minor issues with Retry Interval and Max Retry configurations.

- Fixed: Refactored large functions into smaller, modular units to improve code quality, maintainability, and readability.

| Product Component: | Universal Agent, Universal Controller |
|---|---|
| Universal Template Name: | DataBricks |
| Version: | 1.4.0 |
| Vendor: | Databricks |
| Type: | Free |
| Compatibility: | UC/UA 7.1 and above |
| Support: | Community Created |