MLOps and Automation for Machine Learning Pipelines

Learn how to implement MLOps and automate machine learning pipelines for deploying ML models to production. Discover the importance and best practices of machine learning operations.

The buzz of artificial intelligence (AI) has swept across the enterprise, igniting the imagination of executives and inspiring teams to build new machine learning (ML) models. These ML models drive how AI systems analyze and interpret data — and learn, adapt, and make predictions based on that data.

But the journey from shiny ML prototype to AI impact is often fraught with frustration. Models languish in development purgatory, their potential trapped in a tangled mess of manual processes and siloed workflows. This is the deployment gap, the chasm that separates experimentation from execution.

This is also where MLOps comes in. By automating machine learning pipelines, MLOps helps operationalize model integration for optimal performance, paving the way to unleash innovation at scale.

In this post, we'll look at why MLOps matters, what industry analysts are saying about it, and how to automate and orchestrate the MLOps pipeline.

The Rise of MLOps: Navigating the Complexities

MLOps is the convergence of machine learning (ML) and operations (Ops). It aims to streamline the entire ML lifecycle — from model development and training to deployment and monitoring. The goal is to enhance collaboration and communication among data scientists, machine learning engineers, and operations teams, ultimately accelerating the delivery of high-quality ML applications.

According to Gartner, "By 2027, the productivity value of AI will be recognized as a primary economic indicator of national power, largely due to widespread gains in workforce productivity." If this prediction holds true, enterprises unable to operationalize their ML pipelines will find it difficult to keep up with the productivity gains achieved by the enterprises that do.

Understanding the MLOps Pipeline

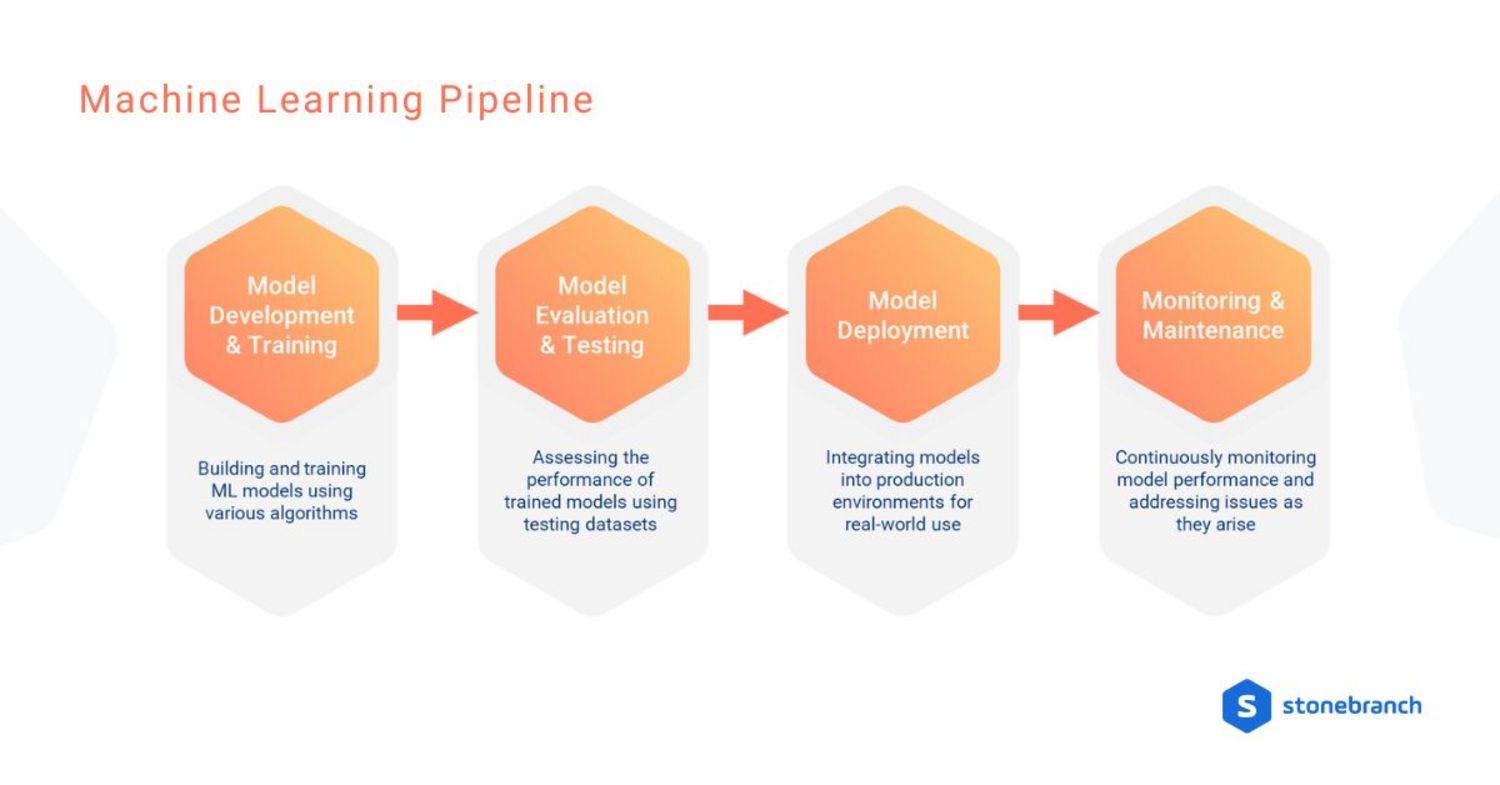

A crucial component of MLOps is the ML pipeline — a set of processes that automate and streamline the flow of ML models from development to deployment. This pipeline typically consists of the following four stages:

- Model Development and Model Training: Building and training machine learning models using various algorithms.

- Model Evaluation and Testing: Assessing the performance of trained models using testing datasets.

- Model Deployment: Integrating models into production environments for real-world use.

- Monitoring and Maintenance: Continuous monitoring of model performance and addressing issues as they arise.
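To make the four stages concrete, here is a minimal sketch in plain Python. It is an illustration only, not a production design: the model is a one-variable least-squares line, the "deployment" is a JSON file, and the function names, sample numbers, and the `max_error` threshold are all assumptions made for this example.

```python
import json
import statistics
import tempfile
from pathlib import Path


def train(xs, ys):
    """Stage 1: fit y = slope * x + intercept by ordinary least squares."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return {"slope": slope, "intercept": mean_y - slope * mean_x}


def predict(model, x):
    return model["slope"] * x + model["intercept"]


def evaluate(model, xs, ys):
    """Stage 2: mean absolute error on a held-out test set."""
    return statistics.fmean(abs(predict(model, x) - y) for x, y in zip(xs, ys))


def deploy(model, path):
    """Stage 3: publish the model artifact where a serving process could load it."""
    path.write_text(json.dumps(model))
    return path


def monitor(path, xs, ys, max_error):
    """Stage 4: reload the deployed artifact and score it on newly observed data."""
    model = json.loads(path.read_text())
    error = evaluate(model, xs, ys)
    return {"error": error, "healthy": error <= max_error}


def run_pipeline(artifact_dir, max_error=0.5):
    # Made-up sample data, used only to exercise the four stages.
    train_x, train_y = [1, 2, 3, 4], [2.1, 3.9, 6.2, 7.8]
    test_x, test_y = [5, 6], [10.1, 11.9]

    model = train(train_x, train_y)
    test_error = evaluate(model, test_x, test_y)
    if test_error > max_error:
        # A model that fails evaluation never reaches deployment.
        raise RuntimeError(f"model rejected: test error {test_error:.3f}")

    artifact = deploy(model, Path(artifact_dir) / "model.json")
    # Data that arrives after deployment feeds the monitoring stage.
    return monitor(artifact, [7, 8], [14.2, 15.8], max_error)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(run_pipeline(tmp))
```

The point of the sketch is the hand-off between stages: evaluation gates deployment, and monitoring reuses the same metric on data the model has not seen before.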

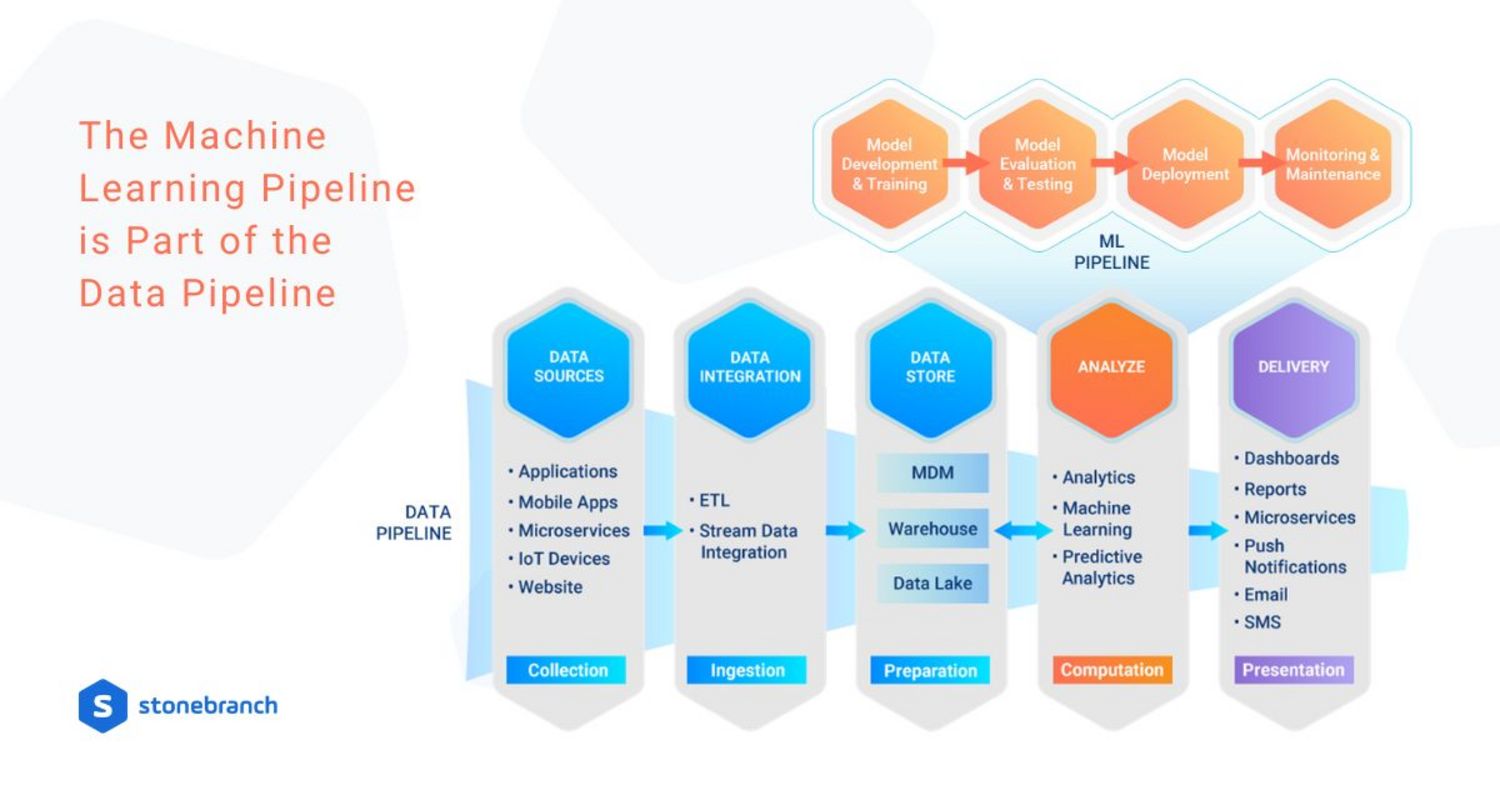

ML pipelines don't stand on their own. They're a highly specialized segment of the data pipeline, a series of five stages that move and combine data from various sources to prepare data insights for end-user consumption. The five data pipeline stages are:

- Collection from data sources

- Data integration and ingestion

- Preparation and storage

- Computation and analysis

- Delivery and presentation

Specifically, the four stages of the ML pipeline fall within the Computation and Analysis stage of the data pipeline, as shown in the diagram above.
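One way to picture this nesting is as a chain of functions, where the ML work is just the body of the fourth step. The sketch below assumes two hypothetical sources (`crm` and `web`), made-up records, and a stand-in scoring rule in place of a real trained model.

```python
from functools import reduce


def collect(_):
    # Stage 1: collection from data sources (two hypothetical sources).
    return {
        "crm": [{"id": 1, "spend": "120"}, {"id": 2, "spend": "80"}],
        "web": [{"id": 1, "visits": 14}, {"id": 2, "visits": 3}],
    }


def integrate(sources):
    # Stage 2: data integration and ingestion, joining records on a shared id.
    merged = {}
    for records in sources.values():
        for record in records:
            merged.setdefault(record["id"], {}).update(record)
    return list(merged.values())


def prepare(rows):
    # Stage 3: preparation and storage, here only a type cleanup.
    return [{**row, "spend": float(row["spend"])} for row in rows]


def compute(rows):
    # Stage 4: computation and analysis. The ML pipeline lives in this stage;
    # a fixed scoring rule stands in for a trained model.
    return [{**row, "score": row["spend"] / 100 + row["visits"] / 10} for row in rows]


def deliver(rows):
    # Stage 5: delivery and presentation, highest score first.
    ranked = sorted(rows, key=lambda row: row["score"], reverse=True)
    return [f"customer {row['id']}: score {row['score']:.2f}" for row in ranked]


STAGES = [collect, integrate, prepare, compute, deliver]


def run_data_pipeline():
    # Feed each stage's output into the next stage.
    return reduce(lambda data, stage: stage(data), STAGES, None)


if __name__ == "__main__":
    for line in run_data_pipeline():
        print(line)
```

Because every stage only sees the previous stage's output, a failure or delay upstream (for example in `integrate`) directly affects what the model in `compute` can do.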

According to TechTarget, "Seamless collaboration is an essential ingredient for successful MLOps. Although software developers and ML engineers are two of the primary stakeholders in MLOps initiatives, IT operations teams, data scientists and data engineers play significant roles as well." This description encapsulates the essence of MLOps — its emphasis on collaboration and the convergence of diverse skills required.

Automation and Orchestration: Transforming MLOps for Efficiency

Automation is the linchpin of MLOps, enabling organizations to overcome the challenges associated with manual, time-consuming processes. Through ML pipeline orchestration, organizations can achieve:

- Faster time-to-market: reducing manual interventions speeds up the entire ML lifecycle and enables organizations to deploy models faster.

- Enhanced scalability: automated pipelines let organizations handle large volumes of data and deploy models across diverse environments.

- Reduced errors and downtime: automation minimizes the risk of human error, improving the reliability and stability of deployed ML models.

Often, individual teams will automate the specific pipeline stage they're responsible for. While this offers significant benefits, going a step further to centrally orchestrate the entire pipeline offers even more. By breaking down automation silos, MLOps automation enables:

- Improved collaboration: centralized orchestration connects the work of data scientists and operations teams to foster collaboration.

- Proactive alerts: receive real-time notifications when there's an issue, then use visual dashboards and drill-down reports to quickly find and fix the root cause.

- Enhanced observability: an advanced orchestration platform like Stonebranch Universal Automation Center (UAC) can collect telemetry data from all of the ML applications and systems it orchestrates, and send the unified data set to observability tools for monitoring.

- Lifecycle management for end-to-end orchestration: manage your ML pipeline with a DevOps-like approach that supports jobs-as-code, versioning, and promotion between dev/test/prod environments.
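The idea behind central orchestration can be sketched in a few lines of Python. This is a generic illustration and does not use or represent the Stonebranch UAC API: the `orchestrate` function, the task names, and the alert callback are all hypothetical. It runs tasks in dependency order with the standard library's `graphlib` (Python 3.9+), raises an alert when a task fails, and skips everything downstream of the failure.

```python
from graphlib import TopologicalSorter


def orchestrate(tasks, dependencies, alert):
    """Run tasks in dependency order; alert on failure and skip downstream tasks.

    tasks:        mapping of task name -> zero-argument callable
    dependencies: mapping of task name -> set of task names it depends on
    alert:        callable that receives a message when a task fails
    """
    status = {}
    for name in TopologicalSorter(dependencies).static_order():
        upstream = dependencies.get(name, ())
        if any(status[dep] != "ok" for dep in upstream):
            status[name] = "skipped"
            continue
        try:
            tasks[name]()
            status[name] = "ok"
        except Exception as exc:
            status[name] = "failed"
            alert(f"{name} failed: {exc}")
    return status


def failing_evaluation():
    raise ValueError("accuracy below threshold")


if __name__ == "__main__":
    tasks = {
        "train": lambda: None,
        "evaluate": failing_evaluation,
        "deploy": lambda: None,
        "monitor": lambda: None,
    }
    dependencies = {
        "evaluate": {"train"},
        "deploy": {"evaluate"},
        "monitor": {"deploy"},
    }
    print(orchestrate(tasks, dependencies, alert=print))
```

When each team automates only its own stage, nothing plays the role of `status` here: no single place knows that a failed evaluation should stop the deployment that another team owns.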

Orchestrating the MLOps Pipeline: Best Practices

Navigating the complexities of MLOps requires a strategic approach. Effective orchestration is key to streamlining the development, deployment, and maintenance of your MLOps practice:

- Version Control for ML Models: Implement version control systems to track changes in code, data, and model configurations, ensuring reproducibility.

- Continuous Integration / Continuous Deployment (CI/CD): Apply CI/CD principles to MLOps, automating the testing, integration, and deployment of machine learning models.

- Containerization with Docker: Package ML models and their dependencies into containers, ensuring consistent and portable deployment across different environments.

- Orchestration with Tools like Stonebranch UAC: Use a service orchestration and automation platform (SOAP) to automate and schedule the execution of tasks across the entire MLOps pipeline.

- OpenTelemetry Observability: Collect metric, trace, and log data across disparate applications to track model performance, detect anomalies, and generate alerts for proactive issue resolution.

- Model Governance and Compliance Automation: Ensure that models comply with regulatory standards by automating governance processes and documentation.
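Two of these practices, version control and CI/CD, are easy to sketch in code. The example below is a simplified illustration under stated assumptions: `fingerprint` and `promotion_gate` are hypothetical helper names, the inputs are toy byte strings, and a real setup would rely on dedicated versioning and CI tooling. The first function derives a single version id from code, data, and configuration, so any change to one of them produces a new id. The second is the kind of check a CI/CD job might run before promoting a model.

```python
import hashlib
import json


def fingerprint(code, data, config):
    """Derive one version id from everything that determines a trained model.

    code and data are bytes; config is a JSON-serializable dict.
    """
    digest = hashlib.sha256()
    config_bytes = json.dumps(config, sort_keys=True).encode()
    for part in (code, data, config_bytes):
        # Hash each part separately so boundaries between parts stay unambiguous.
        digest.update(hashlib.sha256(part).digest())
    return digest.hexdigest()[:12]


def promotion_gate(candidate_metric, production_metric, min_gain=0.0):
    """Allow promotion only if the candidate beats production by min_gain."""
    return candidate_metric >= production_metric + min_gain


if __name__ == "__main__":
    code = b"def train(): ..."
    data = b"x,y\n1,2\n"

    v1 = fingerprint(code, data, {"lr": 0.1, "epochs": 5})
    v2 = fingerprint(code, data, {"epochs": 5, "lr": 0.1})   # same config, reordered keys
    v3 = fingerprint(code, data, {"lr": 0.01, "epochs": 5})  # changed hyperparameter

    print("same config gives same id:", v1 == v2)
    print("changed config gives new id:", v1 != v3)
    print("promote:", promotion_gate(0.93, 0.90))
    print("promote with required gain:", promotion_gate(0.91, 0.90, min_gain=0.02))
```

Storing the fingerprint alongside each deployed model makes it possible to trace a prediction back to the exact code, data, and settings that produced it, which also supports the governance practice listed above.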

Conclusion: The Future of MLOps

As organizations continue to invest in AI and machine learning, MLOps principles will play an increasingly pivotal role in realizing the full potential of these technologies. By embracing orchestration to deploy machine learning models, organizations can not only enhance efficiency and reliability but also position themselves at the forefront of innovation. As Gartner suggests, the future lies in a collaborative approach that brings together the expertise of data scientists, engineers, and business leaders to operationalize AI and drive business value. In this era of digital transformation, MLOps and orchestration are the key drivers to seamlessly integrate machine learning into everyday operations.
